The k-armed bandit

Contents

12.2. The k-armed bandit#

The “\(k\)-armed bandit”, or “multi-armed bandit” problem is a classic problem in reinforcement learning. We like it because it’s both interesting and fairly readily applicable to everyday life, but it also includes many of the key tenets of RL problems: a tradeoff between exploration and exploitation, a set of strategies that are not obviously optimal but one of which might be, and a problem space that we can define but also imagine how to change and adapt as new situations or applications arise. Also, it’s fun and frequently involves pictures of an octopus gambling, so really there’s nothing not to like.

Intuition#

Suppose you want to order food from a restaurant (obviously, because your attempts at reinforcement learning to get better at cooking have finally led you to the optimal one, which is have someone else do it). How do you decide which restaurant to choose? We can simplify your choices into two broad options:

Explore: Try something new

Exploit: Stick with what you know

Exploration vs. exploitation is a key tension in reinforcement learning – and, of course, in our own lives. Whether we’re choosing between restaurants or careers or life partners (all equally important choices, of course), we are always navigating the tradeoff between sticking with what we know and trying something new that we might like more, but risk of liking less.

The tension between exploration vs. exploitation has been written about extensively, not just specifically in the field of RL. One of this textbook authors’ favorite papers on the subject comes from organizational learning and adaptability, and is described in the beautiful 1991 paper, “Exploration and Exploitation in Organizational Learning,” by James C. March. (In related news, academics really do come up with the most creative paper and book titles, don’t we?)

We can further refine our behavior rules, or strategies, within this broader framework of exploration vs. exploitation. For example, we could explore in a number of different ways:

Randomly (e.g., select a restaurant at random within a certain range of where we are located)

Based on Yelp reviews (e.g., rank the options within a certain radius by the mean rating weighted by the number of reviews)

Based on personal recommendations from others (e.g., try a recommendation from your closest friend first, then your second-closest, and so on, making sure to tell your friends precisely how they are ranked as you go)

It’s a little less interesting to examine exploit strategies, as they tend to be “keep doing the same thing as before”, though that “same thing” could be:

Keep going to the exact same single restaurant over and over

Keep going to the same three restaurants in a rotating fashion

Choose one of your top three favorite restaurants at random each time you are hungry

Already, we can see some exploration strategies (e.g., randomness) is sneaking into our exploiting in the above examples. Indeed, many more complicated strategies can arise when we do some mix of exploration and exploitation, such as:

Go to your favorite restaurant until you are sick of it, then pick an exploration strategy

Explore three different restaurants, then stick with the one you like best

Explore until you find a restaurant that exceeds some satisfaction threshold for you, go to that restaurant until you get sick of it (i.e., it goes below that threshold), then explore again until you find something that exceeds your threshold, and go to that until you get sick of it, and so on

As you can see, these strategies can get quite complicated quite quickly. How do we know which strategy to follow? Which one will get us to our optimal outcomes? One way to find out would be to try all the strategies for a certain number of meals and see how it turns out. In addition to that taking a very long time, and a lot of money, it also poses another question: what strategy of strategies should we follow? That is, should we implement each of the nine strategies listed above for a certain amount of time? Should we skip “random” and/or “never change”? What else might we be missing? (It’s a reinforcement learning problem all the way down!)

In order to save our time and our money – though perhaps to the dismay of local restaurants – we can use a computer to simulate these strategies in a problem space we define, and then see which one gets a higher reward. Enter the \(k\)-armed bandit!

Welcome to fabulous Las Vegas!#

The word “bandit” in the multi-armed bandit problem comes from the Vegas term for a slot machine: a one-armed bandit. (If you haven’t done much gambling in your life, it’s called as such because you tend to lose a lot of money, on average, after you pull the “arm” a whole bunch.)

Fig. 12.3 One-armed bandit#

When we just have one arm to pull, things are not terribly interesting: our expected payoff is based on whatever the expected value of payoffs is in the slot machine. We face something of a choice, in that we can think about how many times we want to pull the lever to learn about what the underlying distribution of payoffs is, or we could decide not to bother and go spend our money on something else (which then becomes an explore vs. exploit problem itself).

But things get a little more interesting if we find ourselves standing in front of two slot machines, or a two-armed bandit, or three slot machines, or a three-armed bandit. Suppose we’re also committed to at least trying to make some money from these machines. What’s the best way to optimise our payoffs in this case? That is, given that we do not know the true underlying distribution of payoffs for each machine, or even if the payoffs are the same, similar, or wildly different, what arm-pulling strategy will earn us the highest payoff?

Fig. 12.4 Three-armed bandit#

Already you may have some intuition that you’re going to want to (likely) explore the three bandits a bit to see if you can figure out which one has the highest payout, if any, and then exploit that particular bandit. But even then, our “optimal” strategy is going to vary, including whether or not this is even optimal, depending on how many trials we have, how risky it is if we don’t make any money (or even lose money, which is a seriously cruel but plausible outcome if anyone is interested in higher-stakes slot machines), and what, if anything, we know or suspect about the payoffs of the three bandits. (For example, has someone come up to us and told us one is “lucky”? Will we let that inform our decision?)

We could imagine working out by hand what strategy to go for based on a few assumptions we might make in answer to the above question. But as the number of bandits gets larger – 4, 5, 6, … up to \(k\) – this calculation becomes more difficult and time-consuming – it will certainly take more trials to get any information about the underlying distribution of payoffs across these slot machines, but it also will allow for increasingly complicated strategies.

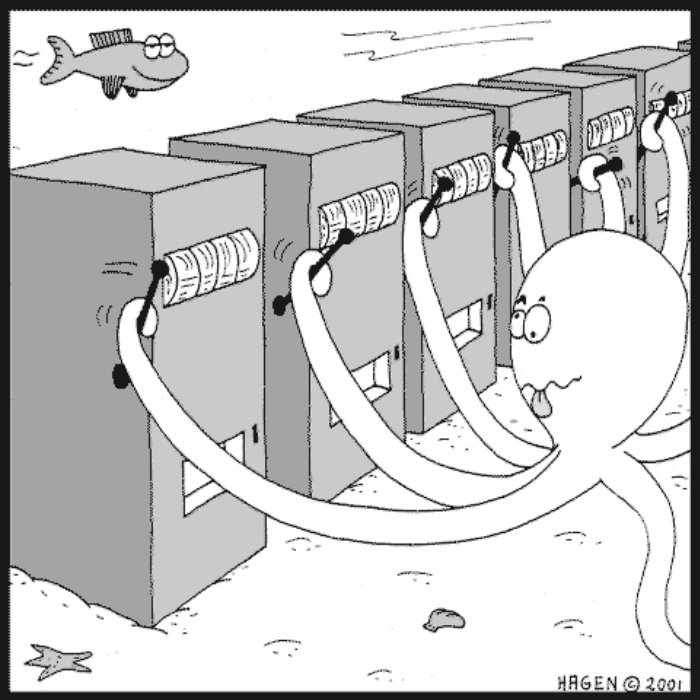

You can imagine the delight, then, when scientists studying reinforcement learning discovered the below cartoon by Hagen Cartoons. While the original title of the cartoon is “compulsive gambling”, of course, we all decided we loved it because it perfectly summarizes the challenges faced by anyone in a problem where it’s not obvious what the payoffs for each option are going to be, and there’s finite time to figure out which one(s) are best – and thus there surely must be an optimal strategy or set of strategies that will help us optimize our payoffs over many choices characterized by uncertainty. (Thanks, Hagen Cartoons! Also, if you can’t get enough images like this, a quick google image search will reveal a treasure trove of spinoffs since.)

Fig. 12.5 An octopus facing the multi-armed bandit problem (Source: Hagen Cartoons)#

What’s an octopus to do?#

Our octopus’s situation is currently as follows:

Each slot machine has an underlying reward distribution

We (the researchers, the casino, etc.) know the reward probabilities for each machine, but they are unknown to the octopus (the gambler, the ant on the beach, the hungry person wandering New York City)

The gambler’s objective is to maximize total expected rewards over a finite number of trials (or \(n\) time steps)

What should the octopus do? Building from our earlier discussion of choosing restaurants, we can start to outline some possible strategies that strike different balances of exploration and exploitation, e.g.:

Pure exploitation – pick a machine at random and pull only that lever over \(n\) trials

Pure exploration – move randomly between the machines over \(n\) trials

Smart exploration – explore across machines for some number of trials that is (possibly much) smaller than \(n\), and then exploit the one that seems to have the highest payoff for the remaining trials until you reach \(n\).

The smart exploration option is where things can get really complicated, of course, and are generally the basis for the more interesting strategies we can explore in reinforcement learning. In fact, two of the three most common strategies that tend to be implemented when first building an RL simulation of the \(k\)-armed bandit problem are based on this “smart” exploration. We’ll consider the most common three in turn.

Random selection#

It’s relatively trivial in code to implement a strategy where the computer (or octopus) pulls each lever with equal probability. While it’s not necessarily, or frankly even often, going to be the most interesting or high-paying strategy (though it can be! sometimes the simple ones are surprisingly effective depending on the situation – in reinforcement learning as in life!), including a random strategy can be a good baseline by which to evaluate the performance of other strategies. Is a particular strategy any good? Well, if we don’t know the underlying distributions, we can at least evaluate whether it is better than random. (This is similar to how we evaluated accuracy in \(k\)-NN in terms of whether, at minimum, it was better than simply choosing a label at random in proportion to the distribution of labels.)

Epsilon-greedy#

Epsilon greedy, or E-Greedy, or \(\epsilon\)-Greedy, is a very common strategy, and is an example of a smart exploration strategy. It works as follows:

Initial exploration: randomly explore for some pre-period of time (usually a rather small proportion of the overall number of trials, but it doesn’t have to be)

Choose winner: Choose the machine with the highest initial score during the initial exploration period

Set epsilon value (\(\epsilon\)): choose a value between 0 and 1, e.g., 0.01; this will be the percentage of time you continue to explore

Exploit the winner but retain some exploration: For the remaining trials, pull the winning variant 1-\(\epsilon\) percent of the time (e.g., 90%), and explore randomly the remaining 10% of the time).

This strategy tends to work reliably well under a wide specification of the \(k\)-armed bandit problem.

Upper Confidence Bound (UCB)#

This is also known as an “optimistic” strategy. The idea here is to take into account not just the mean payoffs of each machine, but also its distributions. We first explore randomly and look for the variant with the highest upper bound (as opposed to highest mean payoff) and then we exploit that one. It’s optimistic because the strategy favors actions with the potential to have the highest optimal value. Of course, depending on the underlying circumstances, this may or may not be an optimal strategy. (Again, you can think of this in the real world – UCB is like choosing to be an actor or an NBA player with a high upper-bound salary rather than a more conservative career with a lower upper bound but more predictable and at least decent mean salary).

Which strategy is best?#

We can simulate all of this in python and end up with results that indicate which strategy will average the highest payoffs, which we’ll do in the next section.